Civs Rot

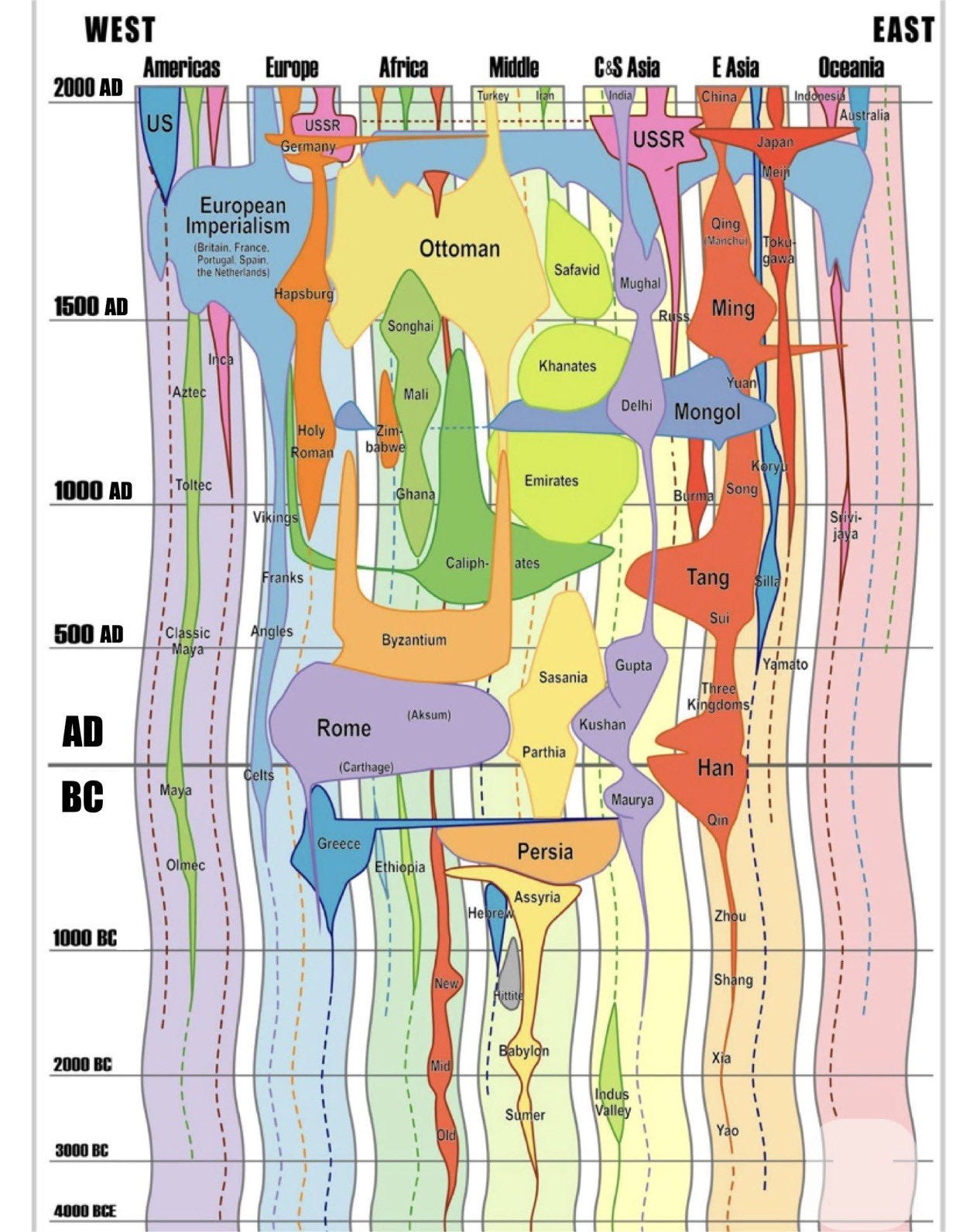

Why do civilizations (civs) rise and fall? A great many explanations have been offered, usually blaming civ falls on something concrete that the author doesn’t like about our current civ, to warn us against it: say, promiscuity, civil war, or inequality. But let me consider a rather abstract empirical approach.

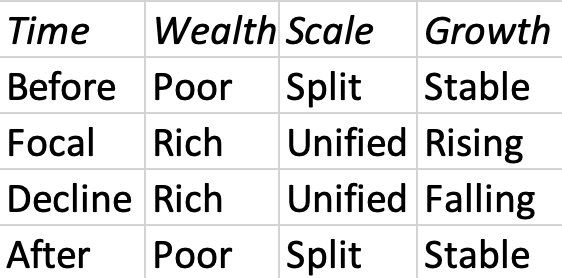

What is the key pattern of the rise and fall of civs? It seems to me that we see a four-step pattern in time. This pattern comes initially from identifying a rare focal historical period with an impressive civ, and then looking at what happened both before and after that focal period. The focal period is characterized by these key features: rich, unified, and rising. When we go back far enough before then to find things being different, we find a before period characterized by: poor, split, stable. And when we go forward in time far enough to find things being different, we first find a decline period characterized by: rich, unified, and falling. Then after that we find an after period that looks like the before period: poor, split, stable.

What can we infer from this four-step time pattern in these three key features of wealth, scale, and growth? As the before period has been selected on the basis of a rare focal period, we can expect that a selection effect dominates its relation to the focal period. This can plausibly explain why all three key features are better in the focal period than in the before period. Some other factors, call them “culture”, happened to get better, causing the key features to get better. This transition suggests a tendency for all these features to be correlated.

No such selection effect, however, would mess with the relation between the focal period and the decline and after periods. And the only difference in key features between the focal and the decline periods is the change in growth from rising to falling. We can thus infer that this change in the growth rate was accompanied by a decline in those other “culture” features for which we credited the rise. And we can also infer that this causal channel from culture to growth rate during this period was not mediated by wealth and scale, as those stayed the same here.

In the last transition, from decline to after, wealth and scale get worse, but growth gets better. So the pattern of the last two transitions in our four-step time pattern suggests that changes in growth tend to precede changes in wealth and scale.
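The bookkeeping behind these inferences can be made explicit in a few lines. Below is a minimal Python sketch, not anything from the post itself: it encodes the four periods with the feature labels used above (the `PERIODS` table and the `changed_features` helper are hypothetical names) and lists which key features change across each transition.

```python
# Hypothetical encoding of the four-period pattern: each period is tagged
# with the three key features (wealth, scale, growth) named in the text.
PERIODS = {
    "before":  {"wealth": "poor", "scale": "split",   "growth": "stable"},
    "focal":   {"wealth": "rich", "scale": "unified", "growth": "rising"},
    "decline": {"wealth": "rich", "scale": "unified", "growth": "falling"},
    "after":   {"wealth": "poor", "scale": "split",   "growth": "stable"},
}

FEATURES = ("wealth", "scale", "growth")


def changed_features(start: str, end: str) -> list[str]:
    """Return the key features whose values differ between two periods."""
    a, b = PERIODS[start], PERIODS[end]
    return [f for f in FEATURES if a[f] != b[f]]


if __name__ == "__main__":
    for start, end in [("before", "focal"), ("focal", "decline"), ("decline", "after")]:
        print(f"{start} -> {end}: {changed_features(start, end)}")
```

Only growth differs between the focal and decline periods, which is the comparison the argument leans on to separate culture's effect on growth from any effect routed through wealth and scale.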

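Also, since growth improves in the after period even though wealth and scale are low, and growth is downstream of culture, there’s something about low wealth and scale that is good for culture.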

The causal pathways tend to go from culture to growth to wealth and scale, and that last causal step involves a substantial time delay. But there’s also something about low wealth and scale that tends to heal cultures, and so plausibly also something about high wealth and scale that tends to hurt cultures.

So the overall story that we can infer here is this: other “culture” features tend (with noise) to cause the key features of wealth, scale, and growth. Sometimes cultures get unusually lucky and get “good”, which tends to grow all three of these key features at once. Then later these “culture” features go “bad”, which first causes the growth rate to get worse, and then with a delay the other key features get worse. But finally, when the key features are bad, culture heals.

This seems to me to be a simple story of civ rot, via culture rot. Wealth, unity, and growth are downstream from culture, which on rare occasions gets luckily good, but after that there’s a “regression to the mean”, and culture consistently tends to go bad, first causing growth to decline, and later wealth and scale to decline.

This seems analogous to the known pattern for corporate cultures today, which tend to consistently go bad, causing firms to go broke, even when strongly incentivized CEOs try hard to prevent that decline.

Cultural drift is one possible mechanism by which exceptionally good cultures could tend to go bad, both in firms and in civs. High wealth and scale could cause lower rates of local cultural variety and selection pressures, and higher rates of context change and internal cultural change.

"Cultural drift is one possible mechanism by which exceptionally good cultures could tend to go bad, both in firms and in civs."

Another mechanism, one I have personally lived through at firms: when you get big and successful, there is a tendency to assume your success is due to a good culture, so why change anything? The culture gets cocky and stops bringing in new ideas, and once the cultural immune system shuts off, cancers begin to take root. Andy Grove famously said that only the paranoid survive, but the real question is how one remains paranoid in the midst of success.

Does this happen to civilizations as a whole? Maybe. I instinctively distrust any group or culture that is too self-assured. That is always the step before the downfall.

"We can thus infer that this change in the growth rate was accompanied by a decline in those other “culture” features for which we credited the rise. And we can also infer that this causal channel from culture to growth rate during this period was not mediated by wealth and scale, as those stayed the same here."

We say "rise and fall", not "rise and decline", because the rise is much slower than the fall. This is the most crucial observation, I think. It suggests we look to catastrophe theory.
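For readers unfamiliar with catastrophe theory, its simplest relevant picture is a fold: a control parameter that changes slowly and steadily can move the system only a little for a long time and then drop it abruptly. Here is a minimal Python sketch assuming the standard cusp normal form (one common sign convention) with gradient dynamics; the parameter values, step sizes, and the `relax`/`sweep` helpers are illustrative choices, not a model of any actual civilization.

```python
# Fold in the cusp catastrophe (normal form, one common sign convention):
#   V(x) = x**4/4 - a*x**2/2 - b*x, with gradient dynamics dx/dt = -V'(x) = -x**3 + a*x + b.
# For a > 0 there are two stable states whenever |b| < 2*(a/3)**1.5.
# All parameter values and step sizes below are illustrative.


def relax(x: float, a: float, b: float, dt: float = 0.05, t_total: float = 100.0) -> float:
    """Let the state settle under fixed (a, b) using forward-Euler steps."""
    for _ in range(round(t_total / dt)):
        x += dt * (-x**3 + a * x + b)
    return x


def sweep(a: float = 1.0, b_start: float = 0.6, b_end: float = -0.6,
          steps: int = 120) -> tuple[list[float], list[float]]:
    """Lower the control parameter b in equal steps, relaxing x after each one."""
    x = 1.0  # start near the upper branch
    bs, xs = [], []
    for i in range(steps + 1):
        b = b_start + (b_end - b_start) * i / steps
        x = relax(x, a, b)
        bs.append(b)
        xs.append(x)
    return bs, xs


if __name__ == "__main__":
    bs, xs = sweep()
    drops = [xs[j - 1] - xs[j] for j in range(1, len(xs))]
    k = max(range(len(drops)), key=drops.__getitem__)
    print(f"largest one-step drop: {drops[k]:.2f} at b = {bs[k + 1]:.3f}")
```

In this sketch each step lowers b by the same 0.01, and x drifts down by at most a few hundredths per step until b passes the fold near -0.385, where x falls by about 1.8 in a single step. Raising b again would not undo the jump until b passed about +0.385 (hysteresis).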

There is no reason to think that the change from growth to decline should be attributed to a change of some system parameter from increasing to decreasing. I'm pretty confident that's usually not the case in complex systems. The system moves through its phase space as many parameters change simultaneously, and typically a catastrophe happens when one variable, the one we identify as "growth", increases too rapidly with respect to some other compensating variable, even though that compensating variable might also be growing. The collapse isn't caused by a single variable or parameter.

Most things we measure, e.g., an economy's GDP, are variables, not parameters. Parameters govern functions; functions compute variables from parameters and variables. A collapse means a sudden fall in some variable for which more is better. But that variable is probably the output of a complex function of other variables. Collapse is caused not by a change from increasing to decreasing in a parameter, but by a /mismatch/ between sets of variables, where "mismatch" means "a combination which results in some function producing a value below rather than above a threshold."
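A toy version of this "mismatch" idea, as a minimal Python sketch with made-up numbers (`load`, `capacity`, the growth rates, and the threshold are all hypothetical): both variables grow every single step, yet the function that matters, capacity divided by load, eventually crosses its threshold.

```python
from typing import Optional

# Toy "mismatch" collapse: load and capacity both grow every step, but the
# function that matters (capacity / load) eventually drops below a threshold.
# All numbers are hypothetical.


def first_collapse(load0: float = 50.0, cap0: float = 100.0,
                   load_growth: float = 0.05, cap_growth: float = 0.03,
                   threshold: float = 1.0, horizon: int = 200) -> Optional[int]:
    """Return the first step t at which capacity/load < threshold, or None."""
    for t in range(horizon + 1):
        load = load0 * (1 + load_growth) ** t
        capacity = cap0 * (1 + cap_growth) ** t
        if capacity / load < threshold:
            return t
    return None


if __name__ == "__main__":
    print(first_collapse())  # 37: both variables rose every step until then
```

Nothing reverses sign here; the failure comes entirely from one growing variable outpacing another growing variable.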

For instance, in the Lotka-Volterra predator-prey model, there is a predictable series of rises and collapses in the predator population, even while the system parameters (prey and predator reproduction rates, predator starvation rate, & predation efficiency) remain constant. The collapse in predator population happens because the predator /population growth/ rate (not the reproduction rate) depends on the prey population: it equals the predator population times a term that rises with the prey population and is offset by the starvation rate, so it turns negative whenever the prey population falls below a threshold set by the system parameters.
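This behavior is easy to check numerically. Below is a minimal Python sketch of the textbook equations, dx/dt = αx − βxy for prey and dy/dt = δxy − γy for predators, integrated with a hand-written fourth-order Runge-Kutta step; the parameter values, starting populations, and helper names are arbitrary illustrative choices.

```python
# Textbook Lotka-Volterra model with constant parameters:
#   dx/dt = ALPHA*x - BETA*x*y    (prey)
#   dy/dt = DELTA*x*y - GAMMA*y   (predators) = y * (DELTA*x - GAMMA)
# Parameter values and initial populations are illustrative.
ALPHA, BETA, DELTA, GAMMA = 1.0, 0.1, 0.075, 1.5
DT = 0.01


def derivs(x: float, y: float) -> tuple[float, float]:
    """Return (dx/dt, dy/dt) for prey x and predators y."""
    return ALPHA * x - BETA * x * y, DELTA * x * y - GAMMA * y


def simulate(x: float = 10.0, y: float = 5.0, steps: int = 5000) -> tuple[list[float], list[float]]:
    """Integrate with classic fourth-order Runge-Kutta; return prey and predator series."""
    xs, ys = [x], [y]
    for _ in range(steps):
        k1 = derivs(x, y)
        k2 = derivs(x + DT / 2 * k1[0], y + DT / 2 * k1[1])
        k3 = derivs(x + DT / 2 * k2[0], y + DT / 2 * k2[1])
        k4 = derivs(x + DT * k3[0], y + DT * k3[1])
        x += DT / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += DT / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        xs.append(x)
        ys.append(y)
    return xs, ys


if __name__ == "__main__":
    xs, ys = simulate()
    peaks = [i for i in range(1, len(ys) - 1) if ys[i - 1] < ys[i] > ys[i + 1]]
    print("predator peaks at t =", [round(i * DT, 2) for i in peaks])
    print(f"predators shrink whenever prey < GAMMA/DELTA = {GAMMA / DELTA:.1f}")
```

All four parameters stay fixed for the whole run, yet predator numbers swing between roughly 3 and 23 over and over, and they shrink exactly at the states where the prey population is below γ/δ = 20.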

A theory I've had for decades to explain civilizational collapse is that evolution causes a system to become more stable as it grows, but engineering causes a system to become less stable as it grows.

Growth of any kind (population, wealth, territory, whatever) makes a system more complex. Random growth in one area nearly always makes a stable system less stable. So it's surprising that evolution makes a system more stable as it grows.

The reason engineering destabilizes it is also mathematically complicated, but less so: evolved systems have catastrophes (let's call them avalanches, because I got this idea from avalanche theory circa 1990) with a power-law size distribution.

- The size S of an avalanche is the number of things that are perturbed by the avalanche, like the number of grains of sand in a sandpile avalanche.

- The fraction of avalanches of size S is proportional to 1/S^a.

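- Whether S is discrete (avalanche sizes are integers) or continuous (sizes are real numbers), the average avalanche size is the sum or integral of S × 1/S^a = 1/S^(a-1), and that converges under the same condition either way:

-- If a > 2, the average avalanche size is finite.

-- If 1 < a ≤ 2, the average avalanche size is infinite, even though the fractions themselves still add up to 1.

-- If a ≤ 1, even the fractions can't be made to add up to 1 unless avalanche sizes are capped at some maximum.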
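Here is a minimal Python sketch for checking these convergence claims numerically: it computes the average size of an integer-valued power law, with fractions proportional to 1/S^a, truncated at a maximum size N, for one exponent below 2 and one above 2 (both illustrative), as N grows. The `truncated_means` helper is a hypothetical name.

```python
# Average size of a discrete power law with P(S) proportional to 1/S**a,
# truncated at S = 1..N, evaluated at several cutoffs N (illustrative values).


def truncated_means(a: float, cutoffs: list[int]) -> list[float]:
    """Return mean(S) = sum(S * S**-a) / sum(S**-a) over S = 1..N for each N in cutoffs."""
    checkpoints = set(cutoffs)
    num = den = 0.0
    means = {}
    for s in range(1, max(cutoffs) + 1):
        w = s ** -a          # unnormalized probability of an avalanche of size s
        num += s * w
        den += w
        if s in checkpoints:
            means[s] = num / den
    return [means[n] for n in cutoffs]


if __name__ == "__main__":
    cutoffs = [10**3, 10**4, 10**5]
    for a in (1.5, 2.5):
        print(a, [round(m, 2) for m in truncated_means(a, cutoffs)])
```

With a = 1.5 the average grows roughly like the square root of N (about 24 at N = 1,000 and about 240 at N = 100,000), so the answer depends mostly on where you truncate; with a = 2.5 it barely moves, staying around 1.9.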

One of the many problems with engineering society is that humans always think linearly. Whenever an equation comes up in life, humans assume it's either linear, or constant, or just "True" or "False". Humans don't intuitively grasp power laws.

When re-engineering society, humans who aren't religious zealots usually try to make it bigger and better, which means more complicated. Smart engineers can see that these new complications introduce new failure modes. They try to predict how often a system with the proposed change will fail, and how badly it will fail. And they nearly always do this using some linear model, like asking "how many avalanches of size > S will occur per century" or "what is the expected time to an avalanche of size S." Then they engineer the system to get a failure rate that's acceptable /under that linear model/.

The society they're engineering, having evolved, is /always already near a cusp catastrophe/ at every control point. That is, lots of things in it already have a power-law distribution, including the failure they're trying to guard against. Things with power-law distributions are very safe in the short term, because the risk is all concentrated in the long term. Planners looking ahead 1 or 10 or 100 years will inevitably engineer something that will have a system-destroying catastrophe after that lookahead time.
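One way to make "safe in the short term" concrete is to ask what share of the long-run expected damage a planner would ever observe within their lookahead. Below is a minimal Python sketch under loudly hypothetical assumptions: one avalanche per year, real-valued sizes S ≥ 1 with density proportional to 1/S^a, sizes capped at 350 million (the people figure mentioned in this comment), and the typical largest avalanche in T years taken to be the size exceeded on average once in T years. The `seen_fraction` helper is a hypothetical name.

```python
import math

# Hypothetical model: one avalanche per year, density proportional to S**-a
# on [1, s_cap]. The normalizing constant cancels in the ratio below.


def seen_fraction(a: float, years: float, s_cap: float = 350e6) -> float:
    """Share of expected damage (sizes 1..s_cap) coming from avalanches no
    larger than the typical largest one seen within `years` years."""
    if a <= 1:
        raise ValueError("this sketch assumes a > 1")
    # Per-year chance of exceeding size M is about M**-(a-1), so the size
    # exceeded on average once in `years` years is years**(1/(a-1)).
    m = min(years ** (1 / (a - 1)), s_cap)
    if a == 2:
        # The integral of S * S**-2 is log(S).
        return math.log(m) / math.log(s_cap)
    # The integral of S * S**-a from 1 to M is (M**(2-a) - 1) / (2-a);
    # the 1/(2-a) factor cancels between numerator and denominator.
    return (m ** (2 - a) - 1) / (s_cap ** (2 - a) - 1)


if __name__ == "__main__":
    for a in (1.5, 2.5):
        for years in (10, 100, 1000):
            print(f"a={a}, lookahead {years:>4} years: sees {seen_fraction(a, years):.1%}")
```

Under these assumptions, with a = 1.5 a 100-year lookahead covers only about 0.5% of the expected damage, while with a = 2.5 it covers nearly 80%.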

Because avalanches can't be bigger than the whole system, planners might hope that capping the sizes rescues their linear model. But the max avalanche size is typically REALLY BIG, like $15 trillion (in the NYSE), or 350 million (people). So the math gets done right only if you use a truncated power law and take the sum all the way out to that big number; and the bigger the number is, the less stable your estimate is. So you should really be engineering in an /a/ > 2, which you can't do with linear thinking.