Em Software Results

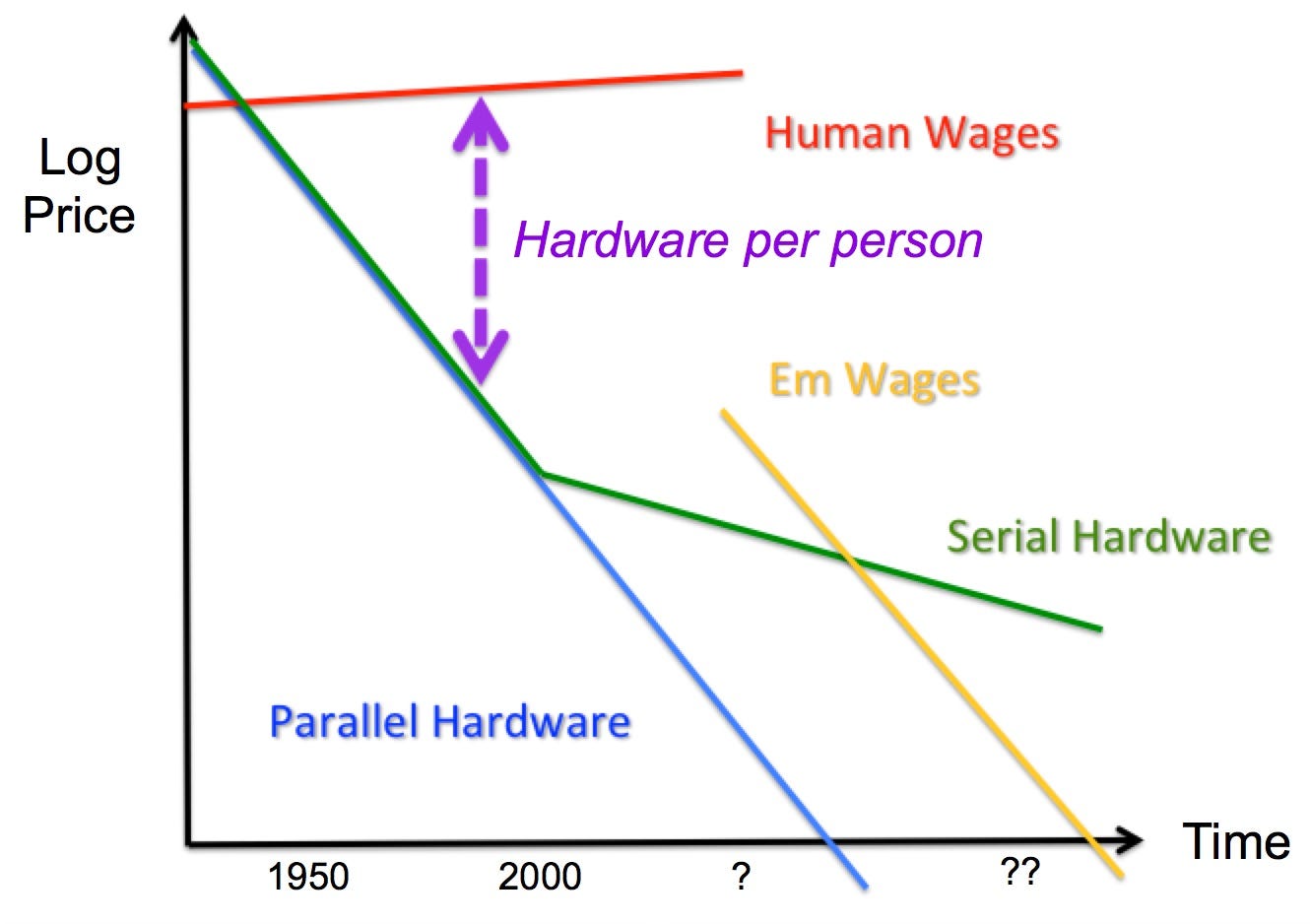

After requesting your help, I should tell you what it added up to. The following is an excerpt from my book draft, illustrated by this diagram:

In our world, the cost of computing hardware has been falling rapidly for decades. This fall has forced most computer projects to be short term, so that products can be used before they are made obsolete. The increasing quantity of software purchased has also led to larger software projects, which involve more engineers. This has shifted the emphasis toward more communication and negotiation, and also more modularity and standardization in software styles.

The cost of hiring human software engineers has not fallen much in decades. The increasing divergence between the cost of engineers and the cost of hardware has also led to a decreased emphasis on raw performance, and an increased emphasis on tools and habits that can quickly generate correct if inefficient software. This has led to an increased emphasis on modularity, abstraction, and on high-level operating systems and languages. High-level tools insulate engineers more from the details of hardware, and from distracting tasks like type checking and garbage collection. As a result, software is less efficient and less well-adapted to context, but more valuable overall. An increasing focus on niche products has also increased the emphasis on modularity and abstraction.

Em software engineers would be selected for very high productivity, and use the tools and styles preferred by the highest productivity engineers. There would be little interest in tools and methods specialized to be useful “for dummies.” Since em computers would tend to be more reversible and error-prone, em software would be more focused on those cases as well. Because the em economy would be larger, its software industry would be larger as well, supporting more specialization.

The transition to an em economy would greatly lower wages, thus inducing a big one-time shift back toward an emphasis on raw context-dependent performance, relative to abstraction and easier modifiability. The move away from niche products would add to this tendency, as would the ability to save copies of the engineer who just wrote the software, to help later with modifying it. On the other hand, a move toward larger software projects could favor more abstraction and modularity.

After the em transition, the cost of em hardware would fall at about the same speed as the cost of other computer hardware. Because of this, the tradeoff between performance and other considerations would change much less as the cost of hardware fell. This should greatly extend the useful lifetime of programming languages, tools, and habits matched to particular performance tradeoff choices.

After an initial period of large rapid gains, the software and hardware designs for implementing brain emulations would probably reach diminishing returns, after which there would only be minor improvements. In contrast, non-em software will probably improve about as fast as computer hardware improves, since algorithm gains in many areas of computer science have for many decades typically remained close to hardware gains. Thus after ems appear, em software engineering and other computer-based work would slowly get more tool-intensive, with a larger fraction of value added by tools. However, for non-computer-based tools (e.g., bulldozers) their intensity of use and the fraction of value added by such tools would probably fall, since those tools probably improve less quickly than would em hardware.

For over a decade now, the speed of fast computer processors has increased at a much lower rate than the cost of computer hardware has fallen. We expect this trend to continue long into the future. In contrast, the em hardware cost will fall with the cost of computer hardware overall, because the emulation of brains is a very parallel task. Thus ems would see an increasing sluggishness of software that has a large serial component, i.e., which requires many steps to be taken one after the other, relative to more parallel software. This sluggishness would directly reduce the value of such software, and also make such software harder to write.

Thus over time serial software will become less valuable, relative to ems and parallel software. Em software engineers would come to rely less on software tools with a big serial component, and would instead emphasize parallel software, and tools that support that emphasis. Tools like automated type checking and garbage collection would tend to be done in parallel, or not at all. And if it ends up being too hard to write parallel software, then the value of software more generally may be reduced relative to the value of having ems do tasks without software assistance.
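The growing sluggishness of serial-heavy software can be illustrated with Amdahl's law, a standard formula for the speedup available from adding parallel hardware. This is a sketch brought in for illustration, not a model from the book draft: the 10% serial fraction and the function name `amdahl_speedup` are assumptions chosen for the example.

```python
def amdahl_speedup(serial_fraction: float, parallel_units: int) -> float:
    """Speedup over a single unit, per Amdahl's law.

    serial_fraction: share of the work that must run one step after another.
    parallel_units:  number of processors available for the remaining work.
    """
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / parallel_units)


# Assumed example: a program whose work is 10% serial.
# As hardware gets cheaper, more parallel units become affordable,
# but the speedup can never exceed 1 / serial_fraction (here, 10x).
for units in (1, 10, 100, 1000):
    print(units, round(amdahl_speedup(0.1, units), 2))
```

Under these assumptions, each further tenfold increase in hardware buys less and less, while a fully parallel task (such as brain emulation, on the post's account) keeps scaling with the hardware.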

For tasks where parallel software and tools suffice, and where the software doesn’t need to interact with slower physical systems, em software engineers could be productive even when sped up to the top cheap speed. This would often make it feasible to avoid the costs of coordinating across engineers, by having a single engineer spend an entire subjective career creating a large software system. For example, an engineer who spent a subjective century at one million times human speed would be done in less than one objective hour. When such a short delay is acceptable, parallel software could be written by a single engineer taking a subjective lifetime.
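The arithmetic behind the "less than one objective hour" figure can be checked with a short sketch. The function name `objective_hours` and the 365.25-day year are assumptions made for the example.

```python
HOURS_PER_YEAR = 365.25 * 24  # assumed average year length, 8766 hours


def objective_hours(subjective_years: float, speedup: float) -> float:
    """Objective (wall-clock) hours needed for an em running at `speedup`
    times human speed to experience `subjective_years` of work."""
    return subjective_years * HOURS_PER_YEAR / speedup


# A subjective century at one million times human speed.
hours = objective_hours(100, 1_000_000)
print(f"{hours:.2f} objective hours")  # prints "0.88 objective hours"
```

That is roughly 53 objective minutes, consistent with the claim above.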

When software can be written quickly via very fast software engineers, product development could happen quickly, even when very large sums were spent. While today investors may spend most of their time tracking current software development projects, those who invest in em software projects of this sort might spend most of their time deciding when is the right time to initiate such a project. A software development race, with more than one team trying to get to market first, would only happen if the same sharp event triggered more than one development effort.

A single software engineer working for a lifetime on a project could still have trouble remembering software that he or she wrote decades before. Because of this, shorter-term copies of this engineer might help him or her to be more productive. For example, short-term em copies might search for and repair bugs, and end or retire once they have explained their work to the main copy. Short-term copies could also search among many possible designs for a module, and end or retire after reporting on their best design choice, to be re-implemented by the main copy. In addition, longer-term copies could be created to specialize in whole subsystems, and younger copies could be revived to continue the project when older copies reached the end of their productive lifetime. These approaches should allow single em software engineers to create far larger and more coherent software systems within a subjective lifetime.

Fast software engineers who focus on taking a lifetime to build a large software project, perhaps with the help of copies of themselves, would likely develop more personal and elaborate software styles and tools, and rely less on tools and approaches that help them to coordinate with other engineers with differing styles and uncertain quality. Such lone fast engineers would require local caches of relevant software libraries. When engineers are in distantly separated locations, such caches could get out of synch. Local copies of library software authors, available to update their contributions, might help reduce this problem. Out-of-synch libraries would increase the tendency toward divergent personal software styles.

When different parts of a project require different skills, a lone software engineer might have different young copies trained with different skills. Similarly, young copies could be trained in the subject areas where some software is to be applied, so that they can better understand what variations will have value there.

However, when a project requires different skills and expertise that are best matched to different temperaments and minds, then it may be worth paying the extra costs of communication to allow different ems to work together on a project. In this case, such engineers would likely promote communication via more abstraction, modularity, and higher-level languages and module interfaces. Such approaches also become more attractive when outsiders must test and validate software, to certify its appropriateness to customers. Enormous software systems could be created with modest-sized teams working at the top cheap speed, with the assistance of many spurs. There may not be much need for even larger software teams.

The competition for higher status among ems would tend to encourage faster speeds than would otherwise be efficient. This tendency of fast ems to be high status would tend to raise the status of software engineers.

Have you thought/written about what kind of socio-computational infrastructure would be practically required to support the em economy?

> Thus over time serial software will become less valuable, relative to ems and parallel software.

Ems are just a special category of parallel software, right? What kind of software are current human programmers?

By historical standards, 50 is gigantic. In the 1950s, I don't think there were even 50 full-time programmers in the entire country. By the 70s, a typical programming team still consisted of one or two people. Team sizes in the single digits were standard practice as recently as the early 90s.