Slowing Computer Gains

Whenever I see an article in the popular sci/tech press on the long-term future of computing hardware, it is almost always on quantum computing. I’m not talking about articles on smarter software, more robots, or putting chips on most objects around us; those are about new ways to use the same sort of hardware. I’m talking about articles on how the computer chips themselves will change.

This quantum focus probably isn’t because quantum computing is that important to the future of computing, nor because readers are especially interested in distant futures. No, it is probably because quantum computing is sexy in academia, appearing often in top academic journals and university press releases. After all, sci/tech readers mainly want to affiliate with impressive people, or show they are up on the latest, not actually learn about the universe or the future.

If you search for “future of computing hardware”, you will mostly find articles on 3D hardware, where chips are in effect layered directly on top of one another, because chip makers are running into limits to making chip features smaller. This makes sense, as that seems the next big challenge for hardware firms.

But in fact the rest of the computer world is still early in the process of adjusting to the last big hardware revolution: parallel computing. Because chip speed gains have slowed dramatically over the last decade, the computing world must get used to writing a lot more parallel software. Since parallel software is just harder to write, there’s a real economic sense in which computer hardware gains have slowed down lately.

The computer world may need to make additional adaptations to accommodate 3D chips, as just breaking a program into parallel processes may not be enough; one may also have to keep relevant memory closer to each processor to achieve the full potential of 3D chips. The extra effort to go into 3D and make these adaptations suggests that the rate of real economic gains from computer hardware will slow down yet again with 3D.

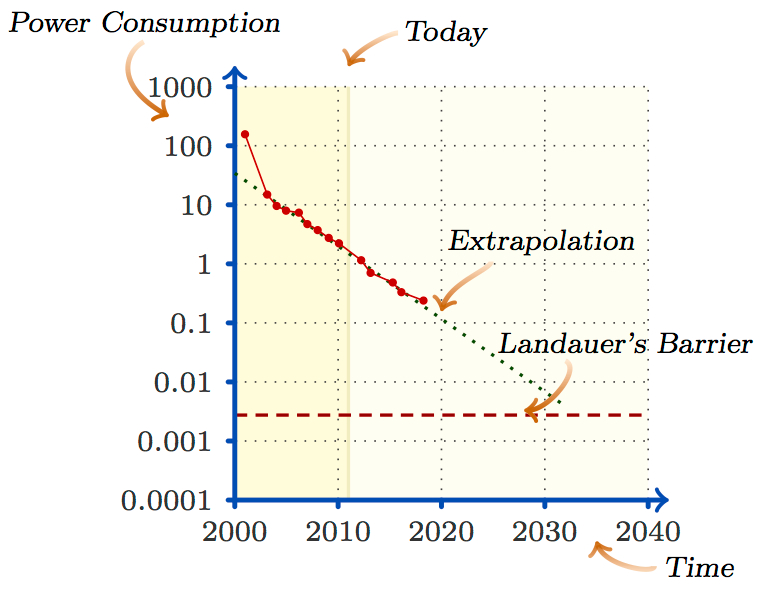

Somewhere around 2035 or so, an even bigger revolution will be required. That is about when the (free) energy used per gate operation will fall to the level thermodynamics says is required to erase a bit of information. After this point, the energy cost per computation can only fall by switching to “reversible” computing designs that only rarely erase bits. See (source):

Computer operations are irreversible, and use (free) energy to in effect erase bits, when they lack a one-to-one mapping between input and output states. But any irreversible mapping can be converted to a reversible one-to-one mapping by saving its input state along with its output state. Furthermore, a clever fractal trick allows one to create a reversible version of any irreversible computation that takes exactly the same time, costing only a logarithmic-in-time overhead of extra parallel processors and memory to reversibly erase intermediate computing steps in the background (Bennett 1989).
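As a minimal sketch of the "save the input along with the output" idea, the Python below checks that an ordinary AND gate is not one-to-one, while the same gate with its inputs carried along is. The Toffoli gate is not mentioned in the quoted passage; it is included only as a standard textbook example of a reversible gate, and all function names are illustrative. This does not implement the Bennett (1989) construction for cleaning up intermediate results.

```python
from itertools import product


def and_gate(a: int, b: int) -> int:
    """Ordinary AND: two input bits collapse to one output bit."""
    return a & b


def and_with_saved_input(a: int, b: int) -> tuple:
    """AND made one-to-one by keeping the input state next to the output."""
    return (a, b, a & b)


def toffoli(a: int, b: int, c: int) -> tuple:
    """Standard reversible gate: flips c only when a and b are both 1."""
    return (a, b, c ^ (a & b))


def is_one_to_one(fn, inputs) -> bool:
    """True if no two distinct inputs map to the same output."""
    outputs = [fn(*x) for x in inputs]
    return len(set(outputs)) == len(outputs)


two_bit_inputs = list(product((0, 1), repeat=2))
three_bit_inputs = list(product((0, 1), repeat=3))

print(is_one_to_one(and_gate, two_bit_inputs))              # False
print(is_one_to_one(and_with_saved_input, two_bit_inputs))  # True
# Applying the Toffoli gate twice restores every input, so it is its own inverse.
print(all(toffoli(*toffoli(*x)) == x for x in three_bit_inputs))  # True
```

The cost of the saved-input version is visible in the output width: three bits out for two bits in, which is the memory overhead that the background cleanup described above is meant to keep under control.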

Computer gates are usually designed today to change as rapidly as possible, and as a result in effect irreversibly erase many bits per gate operation. To erase fewer bits instead, gates must be run “adiabatically,” i.e., slowly enough so key parameters can change smoothly. In this case, the rate of bit erasure per operation is proportional to speed; run a gate twice as slowly, and it erases only half as many bits per operation (Younis 1994).
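The two quantitative claims here can be put side by side in a rough sketch: the thermodynamic minimum of kT ln 2 per erased bit, and bit erasure per operation scaling in proportion to gate speed. The room-temperature default of 300 K, the parameter `bits_at_full_speed`, and the function names are assumptions made for illustration; they are not figures from the post or from Younis (1994).

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)


def landauer_limit_joules(temperature_kelvin: float = 300.0) -> float:
    """Minimum free energy to erase one bit: k * T * ln(2)."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


def bits_erased_per_op(speed_fraction: float, bits_at_full_speed: float) -> float:
    """Bits erased per operation, assumed proportional to gate speed."""
    if not 0.0 < speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be in (0, 1]")
    return bits_at_full_speed * speed_fraction


def min_energy_per_op_joules(speed_fraction: float, bits_at_full_speed: float,
                             temperature_kelvin: float = 300.0) -> float:
    """Lower bound on free energy per operation at a given fraction of full speed."""
    bits = bits_erased_per_op(speed_fraction, bits_at_full_speed)
    return bits * landauer_limit_joules(temperature_kelvin)


def erasure_rate_per_second(speed_fraction: float, bits_at_full_speed: float,
                            ops_per_second_at_full_speed: float) -> float:
    """Bits erased per second by one gate: fewer operations, each erasing less."""
    ops_per_second = ops_per_second_at_full_speed * speed_fraction
    return ops_per_second * bits_erased_per_op(speed_fraction, bits_at_full_speed)


print(f"{landauer_limit_joules(300.0):.3e} J per erased bit")  # 2.871e-21 J per erased bit
```

Under these assumptions, halving a gate's speed halves the energy floor per operation but also halves the operations it performs per second, so the erasure rate per gate falls to a quarter. That trade between energy and throughput is what forces hardware gains to be split once reversible designs are the norm.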

Once reversible computing is the norm, gains from making more, smaller, faster gates will have to be split, some going to let gates run more slowly, and the rest going to more operations. This will further slow the rate at which the world gains more economic value from computers. Sometime much further in the future, quantum computing may be feasible enough so it is sometimes worth using special quantum processors inside larger ordinary computing systems. Fully quantum computing is even further off.

My overall image of the future of computing is of continued steady gains at the lowest levels, but with slower rates of economic gains after each new computer hardware revolution. So the “effective Moore’s law” rate of computer capability gains will slow in discrete steps over the next century or so. We’ve already seen a slowdown from a need for parallelism, and within the next decade or so we’ll see more slowdown from a need to adapt to 3D chips. Then about 2035 or so we’ll see a big reversibility slowdown due to a need to split gains between more operations and using less energy per operation.

Overall though, I doubt the rate of effective gains will slow down by more than a factor of four over the next half century. So, whatever you might have thought could happen in 50 years if Moore’s law had continued steadily, is pretty likely to happen within 200 years. And since brain emulation is already nicely parallel, including with matching memory usage, I doubt the relevant rate of gains there will slow by much more than a factor of two.
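The arithmetic behind the 200-year figure is just the baseline horizon multiplied by the slowdown factor. A tiny sketch, with a hypothetical helper name and assuming the slowdown applies uniformly over the whole period:

```python
def calendar_years_needed(baseline_years: float, slowdown_factor: float) -> float:
    """Calendar time needed to match `baseline_years` of steady Moore's-law progress."""
    if slowdown_factor < 1:
        raise ValueError("slowdown_factor must be at least 1")
    return baseline_years * slowdown_factor


print(calendar_years_needed(50, 4))  # 200, the general bound stated above
print(calendar_years_needed(50, 2))  # 100, the implied bound for brain emulation
```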

If anybody wants to point me to a good source claiming that parallel computing will slow economic impacts, I'd say thanks. I've found a few things like this:

http://www.cs.berkeley.edu/...
http://www.economist.com/no...

But these seem pretty ambiguous. This early paper seems to suggest many people thought parallel computing would be good. Could it be that expectations are just diminishing to moderate levels from too-high ones?

http://citeseerx.ist.psu.ed...

Die shrinkage decreased cost per transistor: the same process steps produced more transistors, each of them more energy efficient. 3D layering does none of that, and as such is nearly irrelevant (absent dramatic improvements in the cost of the process).